Just recently my colleagues presented the idea of Tester 2.0. Tapani Aaltio even gave an interview on the subject on EuroStar TV. Go Tapsa!

In short, Tester 2.0 integrates more deeply into the SDLC, understands it better and creates more value that way, while Tester 1.0 just does its job, doesn't collaborate, pretty much stays put and tests. On this level I'm all for Tester 2.0, but what worries me most is that on the dark side of all this lies the urge to turn us all into developers...

So let's talk about Tester 3.0.

To develop Tester 3.0, we must go deeper into the ideal of Tester 2.0. As said, Tester 2.0 collaborates, cooperates, participates throughout the whole length of the SDLC and considers user and stakeholder needs. Tester 2.0 is involved from day one and is an active and proactive member from then on. It's quite hard to feel bad about this. But at the same time, collaboration with development is emphasized above everything else. Tester 2.0 should sit among developers, start to understand development more deeply, perhaps read or write some code, even think like a developer.

The leading trend in the software testing industry is that testers should know how to code. Many companies won't even hire you if you don't know how to code. People like Alberto Savoia give slap-in-the-face presentations about the death of testing, etc. All in all, the message is that if you are a tester who doesn't know how to code, your future is quite grim.

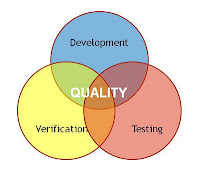

The Holy Trinity

“Testing is a quest within a vast, complex, changing space. We seek bugs. It is not the process of demonstrating that the product CAN work, but exploring if it WILL.” -James Bach

In Verification & Validation (V&V), testing is incorporated into both and is considered to prove something, which is just wrong. In my previous posts I've ranted about the complex nature of testing and the impossibility of proving anything through it, so I won't repeat myself here. Bottom line: when talking about V&V, don't talk about testing.

Since I've stepped away from the usual V&V framing, my definition of verification might need some explaining... For me, verification is anything that tries to prove that what development has done has been done right. For example, when training a boxer, development teaches him to hit, dodge, move, etc., while verification watches him hit, dodge and move and gives feedback if something is not done as it should be. Testing, in the end, is the sparring partner who might do anything to see whether the boxer could survive against a real opponent. The coach? Quality management.

Brace yourself: running scripted test cases, regression testing, applying technical testing like unit testing, test automation (with certain exceptions), etc. are verification to me. You have to apply a certain kind of thinking when doing them, namely development-like thinking. You try to achieve something, make something ready and sell it. You have a goal and you pursue it relentlessly. That is something a tester should not do. Of course a tester has deadlines and goals like the rest of us, but the ultimate goal for a tester is to explore the software and see if it works (to recap Bach's statement). A developer goes for the goal and reports that something is ready. A tester enjoys the ride and reports on the journey to the goal.

The goal and the growth toward it. Think about it.

Numerous times I have been a tester or test manager on the business side, and even more often I've stood at the entry gate where vendors try to deliver software or systems to the customer. The main thing that separates good deliveries from bad ones is the vendor's ability to report on what they have done, on their growth up to the moment they deliver something. For example, a Scrum team's success culminates in its performance at the demo, but so very often, even though the software or system survives that brief moment, it is a steaming pile of shit when put into the wild with other systems. And why? A lack of quality culture, and the fact that even though they had people titled as testers, no one thought like a tester. No one was interested in building a growth story, which could reveal so much to those who are accepting the delivery. When I want to know about quality, I don't want to hear the good news; I want to hear the bad news that has grown into good, about the challenges and how they were beaten.

Tester 2.0 thinks like a developer. Tester 3.0 thinks like a tester.

A swing


Someone (was it James Whittaker?) once said that developers are just engineers lost in implementation. Testers who think like developers might fall into that very same trap. Development can be an insanely difficult task and should by all means be supported by testing, but without risking the tester's thought process or the relationship with other, very likely more important, stakeholders. Evenly divided cooperation is the key, not just sitting with those whose handiwork you are meant not only to support but also to question.

The main role of a software tester is to test software, but that's pretty much the definition of Tester 1.0. Tester 2.0 is something more, but its strong pull toward development is dangerous, as I've tried to point out in this post. Tester 3.0 is still just one more version on the road to Tester N. Its contribution to this path is more refined collaboration with stakeholders and an understanding of not only the whole SDLC but everything affecting it. While development and its verification draw on computer science, testing finds flaws in them through psychology, anthropology, even philosophy if you will. The thinking process is different and should be nourished as such. Only by being different can testing truly benefit development.

Deep, huh? Well, that's Tester 3.0. ;)

Our quote today defines the very essence of this blog post:

“Problems cannot be solved by the same level of thinking that created them.” -Albert Einstein

Yours truly,

Sami "Tester 2.9" Söderblom

And about performance testing... I like the idea that performance tests are executed nightly while the application is being developed. But the fact is that this is often more like performance measurement under some amount of load. Proper performance testing (in my opinion) tries to find the potential bottlenecks in the application or in the whole system running it. This usually means that you have to execute different kinds of scenarios (stress, load, endurance) and have proper monitoring and analysis in place while you do it. How do you fit all this into a Scrum project, for example?

Oh yeah... if some project is a Scrum project and something is not good, then no problem: since the sprints last only two weeks, the new release will be out quite soon. This basically means that no one has to think about quality, since it will just "happen" at some point. To me this sounds like sloppy busy-work, and I hope they never build aeroplanes like this.

One thing that also annoys me a bit is the claim that "there should be no quality sprints... because they are not needed!". If some application is built the agile way and it has severe performance bottlenecks when it is deployed to production (where it actually interacts with other applications and services that are not so agile), then how is this problem solved in an agile way? Just buy more hardware because it's cheap?

---

Unrelated example: a band is making a record in the studio.

case 1: the band has a producer who is never completely satisfied with the band's performance and squeezes everything out of the band members. It's hard work, but finally the band makes an excellent record.

case 2: the same band makes their second record. Now they have more money and freedom and they don't want the annoying producer anymore; they want to produce the new record themselves! And the result is that the record is sloppy and it sucks.

case 3: the band accepts the fact that their 2nd record sucked, and now they are prepared to work extra hard to make a good 3rd record. They hire back the producer, who promises to communicate more with the band and be less of a party pooper. So the project starts again and everybody works at a completely new level of artistic performance. The 3rd record is good again and the band is back on top!

So, what have we learned?

tester 1.0 was like the producer, maybe a bit of a stiff spoilsport, but got the work done

tester 2.0 was the "a bit snooty" group of people who had a bit of a renaissance spirit

tester 3.0 is kinda like tester 1.0 and tester 2.0 combined - a bit more agile than the good ol' tester 1.0 but less of a developer than tester 2.0 (= still thinks like a tester).

---

I'll probably get the "jerk" label from all the "tester 2.0" testers now, but I still say that I have nothing against Scrum or agile, BUT when it becomes a religion it starts to sound a bit weird. I think a good tester is supposed to question everything; isn't that kind of our core competence?!

And sorry about the typos...

The previous rants were written in a very agile mode and quality was not on the list. :P

"Good god!" -James Brown :)

Niiiice comment! You should join TestausOSY's seminar on agile testing on Thursday (http://testausosy.ttlry.fi/j%C3%A4senseminaari-testausta-ketter%C3%A4sti-helsinki-222012-klo-15). There the subject is taken from a very confined and neat environment into a gigantic pension insurance environment. If they really have pulled it off by applying pure Scrum and not Scrumbut, this talk might be worth listening to. And by "pulling it off" I mean taking real responsibility for quality.

In the meantime, you should start a blog too and write about Tester 4.0. I think you might be the only one qualified to do so! :)

@Nixon: Somehow your comments reached my email, but they are not here (a message-too-long error, etc.). It seems that Blogspot also suffers from development-driven thinking... :)

But I'll try to post them here. Here's the first part:

"A few words about tester 2.0 and (fr)agile. Whenever I have doubts about anything agile-ish, I always get the same unresponsive response from the agile believers... "you can't say anything against agile! It solves all our problems... capiche?!"

Before I say anything else, I have to emphasize that I am not saying that being agile is crap and the good old V-model is the way to go. I like the idea of Scrum, for example, BUT I also accept the fact that sometimes the situation is such that you can't use Scrum that easily (to those who beg to differ... welcome to insurance and banking, where you have to deal with z/OS mainframes, COBOL stuff written in the '70s and environments filled with dependencies on 1 000 000 different services, etc. etc.).

Back to tester 2.0, who is deployed to a (fr)agile project. OK, this tester knows some Java and other cool programming languages like Python. He or she starts writing awesome test automation that will tackle all the defects that have been found during the sprint. Everything goes fine in the final demo and people are clapping their hands. Then the application is released to production. Some annoyed tester 1.0 wants to see the new application in production and tries a few tester tricks on it... and BANG! The application is broken. Why?

I'll tell you why... it's because "there are no roles in a Scrum team", and when a tester comes to the project, he is just a developer who DEVELOPS automated tests using JUnit, Selenium, WebDriver and maybe even JMeter or Grinder if he is a performance-oriented professional. So it seems that tester 2.0 is not a tester but an automated test developer: a developer who mostly writes tests. :)

In most places the thing is this: developers want to get somewhere as fast as possible. A tester wants to take photos, see the places, explore and stuff. Like being a tourist (one who doesn't just stay beside the hotel pool and get drunk :D). So, what is this tester 2.0... tester 2.0 is a developer in tester's clothing :D - OR it's kinda like the developers' "plot" to get rid of testers :D. Sorry if this sounds a bit sarcastic, but this is just a "thought experiment"."

Then the second part:

"Example case:

When a project starts, everybody just wants to create rather than create and fix. But a project without any testers sounds like a bad idea, so one developer calls himself a tester and off they go! :) Now the project actually has a bunch of developers, but one developer was smuggled in wearing a tester's clothes. :) So what happens next? The project starts with 2-week sprints that end in a demo. All the developers and the tester 2.0 work well together and the demo is successful. Everybody is happy. What is the problem, then?

The potential problem is this: the demo is quite fast and the application CAN look good during it. The application might also be built very well technically, but the problem is that developers are usually much more "clever" than "normal" people. And developers usually can't even think like a "normal" person. A normal person might do something totally "stupid" that a tester 1.0 would have noticed while testing the application."

@Nixon

Like the great warrior poet Snoop Dogg would put it: "Fo shizzle!!" That's exactly what I'm afraid of when breeding Tester 2.0s. You pointed out that Tester 3.0 is a combination of Tester 1.0 and Tester 2.0, but I'd like to think of 3.0 as just an update. Tester 2.0 is a tester who has the tools of a developer but unfortunately thinks like a developer too. Tester 3.0 has the tools of a developer (though not necessarily) but thinks like a tester, which is actually more important than just learning the technical stuff.

Now Nixon, I, among numerous others, have some insight into what you're capable of technically, and some of it is truly remarkable, but what amazes me most is that at the same time you're able to think like a true tester. That actually makes you not Tester 3.0 but Tester 4.0: a kick-ass tester who's more proficient in actual development than the developers themselves! :)

When the industry wakes up, it will realize that it's not the testers who are about to go extinct; it's the developers! They are about to be replaced by Tester 4.0s! ;)

Gerald Weinberg put it better than I ever could:

http://blog.utest.com/testing-the-limits-with-gerald-weinberg/2012/03/?ls=Newsletter&mc=March_2012